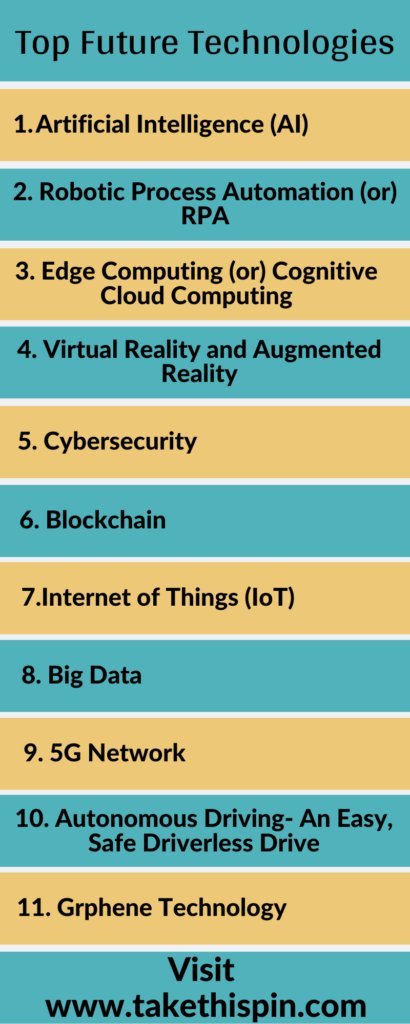

Top Future Technologies


1. Artificial Intelligence (AI)

AI (artificial intelligence) seems to be one of the favorite topics in today’s press releases. Depending on the application and the provider, some announcements focus on artificial intelligence, while others focus on the Internet of Things (IoT), and the two increasingly overlap. We can position the current wave of innovation in artificial intelligence in different ways, but at its core, artificial intelligence acts on data: it can detect patterns in data (and thus, for example, potential attacks).

Artificial intelligence (AI) is a machine (or computer program) that thinks and learns. It is what makes computers (computer-controlled robots or software) think much like intelligent humans. AI combines large amounts of data with intelligent algorithms (sets of instructions) that allow the software to learn from patterns and features in the data, as explained in the SAS AI Primer. Closely related fields, such as human-machine interaction (HMI) and human-computer interaction (HCI), study how people work with these systems.
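To make "learning from patterns in data" a little more concrete, here is a minimal sketch of one of the oldest learning algorithms, the perceptron, trained on a toy dataset. The dataset, the learning rate, and the epoch count are illustrative assumptions. They do not come from the SAS primer, and modern AI systems use far larger models.

```python
from typing import List, Tuple

# Each sample is ((x1, x2), label) with label 0 or 1.
Sample = Tuple[Tuple[float, float], int]

def predict(w: List[float], b: float, x1: float, x2: float) -> int:
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0

def train_perceptron(data: List[Sample], epochs: int = 20, lr: float = 1.0):
    """Classic perceptron rule: adjust the weights only when a prediction is wrong."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in data:
            error = label - predict(w, b, x1, x2)  # -1, 0, or +1
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b

# Hypothetical toy data: the label is 1 only when both inputs are 1 (logical AND).
training_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(training_data)
print([predict(w, b, x1, x2) for (x1, x2), _ in training_data])  # [0, 0, 0, 1]
```

Nobody writes the AND rule into the program. The weights end up encoding it because they are repeatedly corrected against the labeled examples.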

Moreover, AI is gradually learning to solve a wider range of problems. This is the key to creating an Artificial General Intelligence (AGI): a system with a broad range of capabilities that could perform tasks that perhaps no human brain can.

Some speculate that such AI robots would be able to operate, think, and perhaps even gain consciousness without the need for a human brain.

Narrow AI focuses on a single, well-defined task, such as recognizing faces or playing chess, and is the kind of AI in widespread use today. The most common type is rule-based AI, which follows rules written by people. The second most popular type learns from samples of data.

Despite this growth, there is still no clear consensus on whether artificial intelligence is the most powerful technology in the world today or just one of many.

Other debates concern whether intelligent robots should have the same rights as humans. The use of artificial intelligence also raises ethical questions: AI tools offer companies a range of new functionalities, and AI systems, for better or worse, will reinforce what they have already learned. Fears that AI robots could mimic the human brain’s capabilities and take over from humanity are widely voiced and deserve attention.

AIs must learn how to complete tasks, and they get instructions from programmers on how to do so. This is problematic because the machine learning algorithms that underlie many of the most advanced AI tools depend on the data they receive for training. As a result, the potential for bias (distortions) in machine learning is inherent and needs to be monitored, whatever data is used to train the AI program.
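One common way to monitor for such distortions is to measure a model's accuracy separately for each group in the evaluation data and flag large gaps. The sketch below assumes predictions are already available as `(group, true_label, predicted_label)` tuples. The group names, the data, and the 0.1 gap threshold are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def accuracy_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records are (group, true_label, predicted_label); returns accuracy per group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / total[g] for g in total}

def flag_disparity(acc: Dict[str, float], max_gap: float = 0.1) -> bool:
    """True if the best and worst group accuracies differ by more than max_gap."""
    return max(acc.values()) - min(acc.values()) > max_gap

# Hypothetical predictions from a model trained mostly on examples from group "A".
predictions = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 0),
]
acc = accuracy_by_group(predictions)
print(acc)                  # {'A': 1.0, 'B': 0.5}
print(flag_disparity(acc))  # True
```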

As artificial intelligence science and technology continue to improve, expectations and definitions of AI will change. What we consider AI today may become the mundane functions of tomorrow’s computers. Inventor and futurologist Ray Kurzweil predicts that by 2030, AI will reach human-level intelligence and that it will be possible for AI to interface directly with the human brain, boosting memory and turning the user into a human-machine hybrid.

Artificial intelligence is also defined as the science and engineering of making intelligent machines. What makes a machine intelligent enough to make its own decisions about its environment? What are the implications for human society and the future of human-machine interaction?

Since the publication of Nick Bostrom’s book Superintelligence in 2014, advances in artificial intelligence, machine learning, and deep learning have been rapid. According to Stanford researcher John McCarthy, artificial intelligence is the key to making human-machine interaction more efficient, intelligent, and productive.

Tech giants like Google and Facebook are using artificial intelligence and machine learning in their business models and have taken the lead in research and development in these fields. Artificial intelligence is used in a wide range of industries, including healthcare, education, finance, and retail. Google, Facebook, Apple, Amazon, Microsoft, IBM, Oracle, Intel, Samsung, Qualcomm, and others have made headlines for breaking new ground in AI research, which is probably why they have had the most influence on the public perception of AI.

2. Robotic Process Automation (RPA)

Robotic Process Automation, or RPA for short, has established itself as one of the most exciting new technologies in business automation. Just as industrial robots reinvented manufacturing with higher production rates and improved quality, RPA robots are revolutionizing back-office support work. Put simply, robotic process automation is on fire, and its adoption in business shows no signs of slowing down.

RPA does not involve physical robots. Instead, software robots mimic human activity by interacting with applications in the same way a person does. By mimicking the way people use apps and following simple rules, they can automate tasks such as comparing data from different systems or adjusting insurance claims. Once configured, RPA software performs these functions for employees in a specific workflow, often without the help of a programmer.
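Comparing data from different systems is a typical first RPA task. The sketch below shows only the comparison logic. A real RPA tool would first collect the records by driving each application's user interface; here we assume they have already been extracted into dictionaries keyed by record ID. The system names, IDs, and tolerance are hypothetical.

```python
from typing import Dict, List

def reconcile(system_a: Dict[str, float], system_b: Dict[str, float],
              tolerance: float = 0.01) -> List[str]:
    """Compare records keyed by ID and describe every discrepancy."""
    issues = []
    for record_id in sorted(set(system_a) | set(system_b)):
        if record_id not in system_b:
            issues.append(f"{record_id}: missing in system B")
        elif record_id not in system_a:
            issues.append(f"{record_id}: missing in system A")
        elif abs(system_a[record_id] - system_b[record_id]) > tolerance:
            issues.append(f"{record_id}: amount {system_a[record_id]} != {system_b[record_id]}")
    return issues

# Hypothetical exports from a billing system and an accounting ledger.
billing = {"INV-1": 100.0, "INV-2": 250.0, "INV-3": 75.5}
ledger = {"INV-1": 100.0, "INV-2": 205.0, "INV-4": 60.0}
for issue in reconcile(billing, ledger):
    print(issue)
```

A bot would typically route the resulting list to a human for review rather than correct the records on its own.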

Although RPA removes humans from the automated steps, it still requires human involvement to set it up and keep it running properly.

Robotic process automation also requires the CTO or CIO to take a more influential leadership role and more responsibility for RPA tools and process management.

If you are looking for ways to transform your business through robotic process automation, keep in mind that RPA can automate many different processes and goals, often alongside other technologies, so choose the delivery model that fits your project.

Robotic process automation should connect to any system and quickly and easily extract relevant data from any workflow you want to automate. So it is not just about sifting and scraping data from a user interface, but about connecting to back-end data and applying the business rules and logic that drive intelligent process automation. RPA products consist of three main components: a system, an application, and an interface.
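Applying business rules can be as simple as an ordered list of conditions in which the first match decides what happens. The sketch below routes the insurance claims mentioned earlier. The `Claim` fields, the rules, and the 10,000 threshold are hypothetical, and commercial RPA products express such rules in their own designers rather than in Python.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Claim:
    claim_id: str
    amount: float
    policy_active: bool
    documents_complete: bool

# Each rule: (description, condition, routing decision). The first matching rule wins.
Rule = Tuple[str, Callable[[Claim], bool], str]

RULES: List[Rule] = [
    ("inactive policy", lambda c: not c.policy_active, "reject"),
    ("missing documents", lambda c: not c.documents_complete, "request_documents"),
    ("large claim", lambda c: c.amount > 10_000, "human_review"),
]

def route(claim: Claim) -> str:
    for _description, condition, decision in RULES:
        if condition(claim):
            return decision
    return "auto_approve"  # no rule fired: a routine claim the bot can close

print(route(Claim("C-1", 500.0, True, True)))     # auto_approve
print(route(Claim("C-2", 25_000.0, True, True)))  # human_review
print(route(Claim("C-3", 500.0, False, True)))    # reject
```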

It is essential to understand that robotic process automation is simply the automation of rule-based, labor-intensive, and repetitive activities. It is also a crucial technological implementation that aims to standardize business processes and facilitate the integration of different systems. Besides cost efficiency, its non-financial benefits include processes that run more efficiently than when they are handled by humans. Companies that rely heavily on workers for general knowledge and process work can use RPA software to increase productivity and save time and money.

On the other hand, bots built around today’s procedures can make those procedures difficult to change or adapt, and if the security risks of RPA are not controlled, bots could end up doing more harm than good. As a result, bots could hamper and slow down innovation.

If machine learning sounds like the beginning of a bleak dystopian future (think Terminator mixed with The Matrix), robotic process automation might sound like the stage at which machines rule humanity with ruthless efficiency. The reality is more mundane: the term may evoke images of machines repairing aircraft engines, but the robots involved are not robots in the physical sense.

What has become ubiquitous on websites is almost always a combination of human employees and software bots, usually in the form of virtual assistants. One of the most common types of automation I have overseen is the use of “virtual assistants” working hand in hand with human employees.

Robotic Process Automation (RPA) allows an organization to automate tasks in an application or system without humans having to do the work. It is sometimes simply called “process automation.”

In summary, imitating human actions through a sequence of steps that leads to meaningful activity is called robotic process automation. For simplicity’s sake, RPA can be considered a software robot that mimics human actions, while AI is concerned with simulating human intelligence in a machine.

RPA is designed to work well with most older (legacy) applications and is easier to implement than other enterprise automation solutions. A virtual worker takes over the repetitive, tedious tasks that humans perform. RPA tools do not replace the underlying business applications. Instead, they automate the tasks that human workers already perform manually.

Companies can supercharge their automation efforts by introducing RPA and automating higher-level tasks that, in the past, required human perception. RPA enables companies to remain efficient, productive, and competitive at scale by automating routine tasks.

RPA tools can also improve current workplaces by freeing human workers to focus on higher-value tasks. The benefits of robotic process automation are apparent, and more and more companies are demonstrating them through pilot projects.

3. Edge Computing and Cloud Computing

Cloud computing has become one of the most prominent digital trends in recent years, and edge computing extends it to the many remote devices connected to the Internet. One trend in edge computing is the ability to run containers and even complete virtual machines directly on various devices. There has also been a great deal of interest in running machine learning on edge devices.

One related concept is fog computing, a term coined to describe a distributed cloud network that brings edge computing to IoT applications and services. It refers to the extension of cloud computing to the edges of corporate networks. The concept was developed by Cisco, one of the largest networking companies in the world.

Edge computing provides compute, storage, and network resources at the edge of the network, where data is generated, i.e., close to the end user.

The cloud computing model, on the other hand, relies entirely on remote servers for storage, computation, and security. Compared with such a centralized model, the edge computing architecture brings processing closer to the devices that produce the data. Edge devices can handle various types of data that are traditionally sent to central servers in the cloud.

For machine learning, edge and cloud computing typically split the work. Neural networks can be trained on data center servers, because training requires a lot of computing power. The trained model can then be distributed to devices at the edge, which run it on local data, while telemetry flows back to the cloud for in-depth analysis. Until recently, many edge applications were limited to storing sensor data and sending it to the cloud rather than processing it locally.
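The train-in-the-cloud, run-at-the-edge split can be sketched with a deliberately tiny model. Here a least-squares line fit stands in for a neural network, and JSON stands in for whatever model format a real deployment would use (for example ONNX or TensorFlow Lite). The function and class names are hypothetical.

```python
import json
from typing import List, Tuple

def train_in_cloud(samples: List[Tuple[float, float]]) -> str:
    """'Cloud' side: fit y = a*x + b by ordinary least squares, export parameters as JSON."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    var = sum((x - mean_x) ** 2 for x, _ in samples)
    a = cov / var
    b = mean_y - a * mean_x
    return json.dumps({"a": a, "b": b})

class EdgeModel:
    """'Edge' side: loads the small parameter file and runs inference locally."""

    def __init__(self, model_json: str):
        params = json.loads(model_json)
        self.a, self.b = params["a"], params["b"]

    def predict(self, x: float) -> float:
        return self.a * x + self.b

model_file = train_in_cloud([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)])
edge = EdgeModel(model_file)
print(edge.predict(10.0))  # 21.0
```

Only the few bytes of parameters cross the network. The raw readings that the edge model scores never have to leave the device.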

Processing data at the edge makes the computational requirements of AI far more manageable. In a purely centralized model, by contrast, the data from every producer must travel over (often wireless) connections to reach remote cloud servers. Because edge devices filter and summarize data locally, they need much less bandwidth than streaming raw IoT data to a centralized cloud, so a network can support significantly more IoT devices without additional bandwidth.

For now, however, a healthy balance between cloud and edge computing remains the preferred approach to IoT infrastructure development. Centralized cloud-based data storage has higher latency and operating costs because every request requires a round trip to a remote data center, whereas keeping data local at the edge minimizes latency. IoT networks that require some cognitive processing power can therefore place that intelligence at the edge instead of in the cloud.

We can say that cloud computing will remain relevant and will work closely with edge computing to provide data analysis and ongoing responses to organizations. Understanding real-world use cases will go a long way toward bringing edge and cloud computing to life.

When discussing the difference between cloud computing and edge computing, it is worth looking at how data processing is carried out. Cloud computing is typically used to process information that is not time-sensitive, while edge computing is used to process time-sensitive information.
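A minimal sketch of that division of labor follows. A hypothetical edge node reacts to time-sensitive readings on the spot and batches everything else into compact summaries for the cloud. The threshold, the batch size, and the `uploads` list (standing in for real network calls) are assumptions for illustration.

```python
from statistics import mean
from typing import List

ALERT_THRESHOLD = 90.0  # hypothetical limit, e.g. degrees Celsius

class EdgeNode:
    """Hypothetical edge node: urgent checks run locally, summaries go to the cloud."""

    def __init__(self, batch_size: int = 5):
        self.batch_size = batch_size
        self.buffer: List[float] = []
        self.alerts: List[float] = []   # time-sensitive: handled on the device
        self.uploads: List[dict] = []   # stands in for messages sent to the cloud

    def ingest(self, reading: float) -> None:
        if reading > ALERT_THRESHOLD:
            # React immediately instead of waiting for a cloud round trip.
            self.alerts.append(reading)
        self.buffer.append(reading)
        if len(self.buffer) == self.batch_size:
            # Non-urgent data: send one compact summary instead of every raw reading.
            self.uploads.append({"count": len(self.buffer),
                                 "mean": mean(self.buffer),
                                 "max": max(self.buffer)})
            self.buffer.clear()

node = EdgeNode()
for r in [70.0, 72.0, 95.0, 71.0, 72.0, 69.0]:
    node.ingest(r)
print(node.alerts)   # [95.0]
print(node.uploads)  # [{'count': 5, 'mean': 76.0, 'max': 95.0}]
```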

Edge and fog computing are very similar. Both aim to shift data analysis tasks from centralized cloud servers to peripheral devices (IoT devices), reducing latency and processing data intelligently at the time it is created. Dastjerdi and Buyya describe how edge nodes can struggle to handle the workload when multiple IoT applications compete for resources. Fog computing overcomes this limitation by using local clouds, known as cloudlets. Both fog and edge computing bring intelligence and processing capacity to the edges of a network, close to where the data comes from.

IoT software developers can set up local data processing units, and edge computing applications typically combine on-premises systems, the cloud, and data centers. Devices that would otherwise rely entirely on the cloud can process their data locally through APIs exposed by management and orchestration software. This architecture integrates the cloud, fog nodes, and mobile devices with edge computing to reduce latency and deliver real-time responses.

The solution becomes more holistic and can fully exploit cloud computing power for data processing, storage, analytics, and management.

4. Virtual Reality and Augmented Reality

Virtual reality (VR) has been the next big thing for a few years now. The technology can finally create realistic images, sounds, and other sensations that put you smack in the middle of a spectacular imaginary world. Games, movies, and medicine have expanded their use of virtual reality in recent years.

Brands of all shapes and sizes use the technology to reach their audiences in new and exciting ways. As VR headsets populate more and more living rooms, it’s becoming a powerful way for brands to connect with their audience. These trends signal that AR and VR will be an exciting part of many customer journeys. In recent years, this technology has steadily advanced into the mainstream.

This study aims to present augmented reality and virtual reality in the context of their impact on the consumer experience. It focuses on four key areas: education, work, shopping, and online events. Training will be affected in many ways, from education to health care, and the workplace, shopping, and online events will be affected as well.

In augmented reality (AR), virtual objects are superimposed on a real environment. Virtual reality (VR) immerses the user in a simulated environment, sometimes built from photographs, and allows the viewer to interact with the visualization.
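Superimposing a virtual object on a real scene ultimately means working out where a 3D point should appear in the camera image. The standard pinhole camera model does this. The sketch below assumes the object's position is already known in camera coordinates (x right, y down, z forward, in metres). A real AR framework obtains that position by tracking the device's pose. The focal length and image center are hypothetical values.

```python
from typing import Optional, Tuple

def project_point(point_camera: Tuple[float, float, float],
                  focal_px: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole model: map a 3D point in camera coordinates to pixel coordinates (u, v)."""
    x, y, z = point_camera
    if z <= 0:
        return None  # behind the camera: nothing to draw
    u = focal_px * x / z + cx
    v = focal_px * y / z + cy
    return (u, v)

# Hypothetical 640x480 camera with a 500-pixel focal length.
anchor = (0.5, -0.25, 2.0)  # virtual label 2 m ahead, slightly right and above center
print(project_point(anchor, focal_px=500.0, cx=320.0, cy=240.0))  # (445.0, 177.5)
```

The overlay is then drawn at that pixel. The calculation is repeated every frame as the camera moves, which keeps the virtual object pinned to the real world.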

Virtual reality replaces the world around you with a virtual display, and the headset tracks your movements to reflect where you are looking. This motion tracking is built into virtual reality headsets such as the Oculus Rift and HTC Vive.

Both virtual reality and augmented reality provide users with an enhanced, enriched experience, and both use similar types of technology. They differ in what the technology is trying to achieve and deliver to the user, yet they are very similar in what they ask the user to do.

Virtual reality and augmented reality share the aim of immersing the user, but they do so in different ways. A virtual reality environment can be artificial, like an animated scene, or a real place that has been photographed and integrated into a virtual reality app. Mixed reality environments go a step further than augmented reality, allowing users to interact in real time with virtual objects inserted into the real world.

Augmented reality enhances the real world with images, text, and other virtual information instead of replacing it. Using a device such as a smartphone screen or a headset, AR lays digital content over the “real world.” The result is less immersive than virtual reality, but the user stays connected to their actual surroundings.

Augmented reality lets you see the world with digital images layered over it. Virtual reality lets us be anywhere and interact fully with our virtual surroundings, while 360-degree video lets us look around a recorded scene from wherever we are, but without that interaction.

In VR, AR, and event planning, creativity is everything, from the content you share to the way you use the technology. Schools, businesses, and users alike are leveraging the potential of augmented and virtual reality to create and display beautiful, engaging AR and VR presentations with the Fectar Studio app.

We are in Augmented Reality Month, which is an excellent opportunity to map the progress of these technologies over the calendar year.

Although virtual reality headsets are not yet found in many homes, interest in VR is growing, and the industry is expected to explode in the coming years. Augmented reality (AR), which integrates virtual objects into a real environment, helps boost the economy, and this technology should be a big part of the future. In many ways, Facebook’s acquisition of Oculus is what fueled the current virtual reality market.

More powerful headsets are on the way, although existing Windows Mixed Reality headsets do not offer the same functionality as the Oculus Rift and HTC Vive.

According to a recent study by Deloitte, more than 1.5 million companies in the US alone are now using augmented reality and virtual reality technology. In 2017, the global market for AR and VR headsets and accessories was $11.35 billion. The industry is forecast to reach $571.42 billion by 2025, a CAGR of 63.3 percent between 2018 and 2025.
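As a rough consistency check on these figures, the compound annual growth rate (CAGR) formula relates a start value, an end value, and the number of years n. This check treats the 2017 market size as the start value and 2025 as the end, which gives n = 8. That is an assumption, since the quoted CAGR window starts in 2018. With it, the numbers roughly agree:

```latex
\mathrm{CAGR} = \left(\frac{V_{2025}}{V_{2017}}\right)^{1/n} - 1
             = \left(\frac{571.42}{11.35}\right)^{1/8} - 1
             \approx 50.35^{0.125} - 1
             \approx 0.632
```

That is about 63.2 percent per year, close to the reported 63.3 percent.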

For this reason, marketers need to consider how they can use these technologies as part of their marketing strategy. One early technology for building virtual worlds was VRML (Virtual Reality Modeling Language), a file format for describing 3D scenes and the interactions possible within them. Augmented reality and virtual reality are often discussed in the same breath. It may be useful to measure the market by its capabilities, but there is much more to it.

5. Cybersecurity

This course introduces the Community Cybersecurity Maturity Model (CCSMM) as a framework for understanding cybersecurity. It explains how to start building a cybersecurity program and gives practical tips on protecting your community against threats and attacks from cyberspace.

Without giving too much detail, we provide an overview of threats and vulnerabilities to highlight the potential impact of a cyber attack on your community. Cybersecurity systems are essential to the people who use them.

We explain how vulnerabilities can affect your community, the day-to-day workflow of your business, and the impact of a cyber attack on your system.

It includes the development and implementation of advanced cybersecurity solutions for data center systems and networks and the management of data centers and network infrastructure.

With these ten personal cybersecurity tips, we want to help our readers become more security-aware. For more information on how to improve your cybersecurity posture and protect yourself from cyber attacks, see our Cybersecurity Tips and Cyber Essentials.

Cybersecurity is the practice of defending against malicious digital attacks. It is not only about defending yourself against such attacks, but also about protecting your personal data and your identity.

Cybersecurity is the process of protecting your networks, devices, and programs against cyber attacks and restoring them afterward. It became a vital element of the US government’s security strategy in the 1990s and 2000s.

Operational security consists of identifying critical business data, identifying threats to that information, analyzing vulnerabilities, assessing threat levels and risks, and implementing a plan to mitigate those risks. The goal of the cybersecurity discipline is to address current and future threats by preparing for attacks before they occur and presenting as small an attack surface as possible. Security begins with security checks and with making security a priority, but it is never easy, and no system is ever completely secure.
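The "assessing threat levels and risks" step is often done with a simple risk matrix, in which each threat is scored as likelihood times impact and the highest scores are handled first. This is one common convention, not the only one. The sketch below uses it with hypothetical 1 to 5 scales and example threats.

```python
from typing import List, NamedTuple, Tuple

class Threat(NamedTuple):
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), hypothetical scale
    impact: int      # 1 (minor) to 5 (severe), hypothetical scale

def prioritize(threats: List[Threat]) -> List[Tuple[str, int]]:
    """Score each threat as likelihood x impact and sort with the highest risk first."""
    scored = [(t.name, t.likelihood * t.impact) for t in threats]
    return sorted(scored, key=lambda item: item[1], reverse=True)

register = [
    Threat("phishing of staff credentials", likelihood=4, impact=4),
    Threat("unpatched public web server", likelihood=3, impact=5),
    Threat("lost unencrypted laptop", likelihood=2, impact=3),
]
for name, score in prioritize(register):
    print(f"{score:>2}  {name}")
```

The mitigation plan then works down this list, and scores are revisited as threats and controls change.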

Cybersecurity software that works with compliance standards such as PCI DSS and HIPAA should be part of an organization’s information security program. Together, compliance solutions and cybersecurity software should address not only compliance issues but also security issues.

It is essential to take cybersecurity and cyber risk management to the next level, so that employees and other users are properly identified and given appropriate access. Reliable cybersecurity measures, combined with an educated and security-oriented workforce, provide a strong defense against cybercriminals who seek to gain access to a company’s sensitive data.

Businesses need cybersecurity strategies to protect themselves from growing threats such as cyber attacks, data breaches, and data theft. Companies should take a more holistic approach to cybersecurity, and cyber resilience is the newer, more holistic approach.

In the context of digital transformation, every mobile user needs the same level of protection. The attack surface is growing everywhere and no longer stops at the corporate network, so cybersecurity must be a critical part of a company’s overall strategy.

Avoid insecure Wi-Fi networks in public places: insecure networks make you vulnerable to man-in-the-middle attacks. If you have a Wi-Fi network at your workplace, make sure it is secure, encrypted, and hidden.
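On any untrusted network, the practical defense against a man-in-the-middle attack is a connection that is both encrypted and authenticated. The sketch below uses Python's standard `ssl` module to build a TLS client context that verifies the server's certificate and hostname. The helper names are hypothetical. `fetch_server_certificate` needs network access, so the example only defines it and does not call it.

```python
import socket
import ssl

def make_verified_context() -> ssl.SSLContext:
    """TLS client context that refuses servers it cannot authenticate."""
    # create_default_context() loads the system's trusted CA certificates and
    # enables both certificate verification and hostname checking.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse legacy protocol versions
    return context

def fetch_server_certificate(host: str, port: int = 443) -> dict:
    """Open a verified TLS connection; a forged certificate raises ssl.SSLCertVerificationError."""
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        with make_verified_context().wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.getpeercert()

ctx = make_verified_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

An attacker sitting between you and the server on a public hotspot can still see that traffic is flowing. Without a certificate trusted for that hostname, however, they cannot impersonate the server.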

The agency also published a cybersecurity tip sheet outlining the top ten ways entrepreneurs can protect their businesses and customers from cyber attacks. If a contractor or subcontractor discovers and isolates malware connected with a reported cyber incident, it submits the malware to the DoD Cyber Crime Center (DC3) for review and investigation, following the instructions of DC3 or the contracting officer.

Cybersecurity is a discipline dedicated to protecting the systems used to process and store sensitive data. Information security (InfoSec) protects information such as passwords, credit card numbers, and other confidential data. The two overlap heavily: cybersecurity protects organizations that transmit sensitive data over networks or between devices in their business.

A secure cybersecurity system relies not only on cyber-defense technology but also on people making smart cyber-defense decisions. In a man-in-the-middle attack, cybercriminals secretly intercept, and possibly alter, the communication between two parties, for example to steal login credentials. Other attacks rely on malicious logins and malicious code. To protect assets, it is crucial to understand the difference between a man-in-the-middle attack and other attacks on a network or data center. Whether you are an entrepreneur, a security consultant, or a member of the cybersecurity community, cybersecurity focuses on protecting assets from malicious logins and code.

6. Blockchain

Anyone who has followed banking and invested in cryptocurrencies over the past decade is probably familiar with the story of Bitcoin and the technology behind it. The cryptocurrency was developed by the still-anonymous Satoshi Nakamoto and enabled transactions protected from tampering by the use of a blockchain. It is considered the breakthrough that launched the blockchain industry, which advocates say will revolutionize money and government.

By spreading its operations across a network of computers, the blockchain enables Bitcoin and other cryptocurrencies to operate without a central authority. Blockchain technology has created the backbone of a new kind of Internet, one that allows digital information to be distributed but not copied.

The more hash power a proof-of-work network has, the more secure it is. That makes Bitcoin arguably the most secure public blockchain, and it is one of the largest and most influential blockchains globally.

In the blockchain context, immutability means that once something has been recorded on the blockchain, it cannot be manipulated. Because each block is linked to the one before it, changing a recorded transaction would mean rewriting every later block as well. This distributed design protects the blockchain, as a shared database, from damage and makes hacking attempts on a single block futile. In the case of Bitcoin and other cryptocurrencies, a recorded transaction cannot be edited. It can only be corrected by recording a new transaction.

Each node has its own copy of the blockchain, and the network algorithmically approves newly mined blocks, keeping the chain updated, trusted, and verified. Whenever a new block is added to the blockchain, all computers on the network update their copies to reflect the change. Editing the blockchain would therefore require changing the copies held across the network, not just one.

When a miner verifies a transaction, the record is shared with all other participants in a decentralized ledger, and invalid blocks are not added. Different nodes (network participants) hold copies of the same blockchain, and under proof of work, nodes treat the valid chain with the most accumulated work as the official one. With the standard proof-of-work consensus mechanism, all nodes have the same privileges (Ethereum has since switched to proof of stake).

Of course, what is stored in a block depends on the blockchain. A block on the Bitcoin blockchain records Bitcoin transactions. If the blockchain is that of the cryptocurrency Ethereum, its blocks contain Ethereum transactions instead.

What makes blockchains unique is that each block contains a cryptographic hash of the previous block, forming a chain. Each block also contains information such as its block number and the transactions it records, including the value of each transaction.
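The hash-linking idea fits in a few lines. In this simplified sketch, each block stores the SHA-256 hash of the previous block, so editing any earlier block breaks the link that follows it. The block layout (`index`, `transactions`, `prev_hash`) and the JSON encoding are assumptions for illustration. Bitcoin's real block format differs, and it also relies on proof of work and on many nodes holding copies.

```python
import hashlib
import json
from typing import Dict, List

def block_hash(block: Dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()

def append_block(chain: List[Dict], transactions: List[Dict]) -> None:
    """Add a block that records the hash of the current last block."""
    previous = block_hash(chain[-1]) if chain else "0" * 64  # genesis block has no parent
    chain.append({"index": len(chain), "transactions": transactions, "prev_hash": previous})

def is_valid(chain: List[Dict]) -> bool:
    """Every block must store the hash of the block before it."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))

chain: List[Dict] = []
append_block(chain, [{"from": "alice", "to": "bob", "amount": 5}])
append_block(chain, [{"from": "bob", "to": "carol", "amount": 2}])
append_block(chain, [{"from": "carol", "to": "dave", "amount": 1}])
print(is_valid(chain))  # True

chain[0]["transactions"][0]["amount"] = 500  # tamper with an old record
print(is_valid(chain))  # False: block 1's prev_hash no longer matches
```

To hide the edit, an attacker would have to recompute every later block and get the rest of the network to accept the rewritten copy.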

Every computer in the network holds its own copy of the blockchain, which many different people can use and check. Bitcoin’s blockchain also has more people checking it, writing code for it, and mining on it than many other blockchains.

Blockchains are used as distributed databases: identical copies are stored on all of the roughly 60,000 computers that make up the Bitcoin network. Anyone can view a public blockchain’s contents, and users can also choose to connect their computers to the network as nodes. The ledger consists of linked blocks, each of which stores information about a batch of transactions. Blockchain users can access the entire database in real time, including their past transaction history. Indeed, blockchain technology can be used to store data on everything from supply chain stops to votes for candidates.

If we follow the path that networking technology took in business, blockchain innovation will start with single-use applications, such as a private network of distributed ledgers shared by multiple organizations. Just as Bitcoin has gained acceptance as an alternative payment method, companies will experiment with increasingly novel blockchain applications that still rely on a private blockchain ledger to record transactions. For example, someone can set up a deal as a line of code on the blockchain, a so-called smart contract.

Some estimates put Google’s total computing power at only about 5% of the computing power securing the Bitcoin blockchain. Hacking a blockchain the size of Bitcoin’s would require resources, strength, and coordination exceeding the GDP of many small countries.

Ethereum, a blockchain ledger and cryptocurrency, can process 12 to 30 transactions per second. Bitcoin (and Ethereum, before its switch to proof of stake) uses proof-of-work mining, which is designed to get harder as more computing power joins the network. In Bitcoin, the mining difficulty is encoded in the protocol and readjusted every 2,016 blocks so that a new block is found roughly every ten minutes.
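Here is a toy version of proof-of-work mining. It searches for a nonce that makes the block's hash start with a given number of hexadecimal zeros. This is a simplification: Bitcoin actually double-hashes an 80-byte block header and compares the result against a numeric target. The block contents and the difficulty value here are hypothetical.

```python
import hashlib
from typing import Tuple

def mine(block_data: str, difficulty: int) -> Tuple[int, str]:
    """Find the first nonce for which SHA-256(block_data + nonce) starts with `difficulty` hex zeros."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode("utf-8")).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1

nonce, digest = mine("block 42: alice->bob 5", difficulty=4)
print(nonce, digest)
```

Each additional required zero multiplies the expected work by 16, since difficulty 4 already takes about 16**4 = 65,536 attempts on average. Checking a solution, by contrast, takes a single hash, which is what makes proof of work cheap to verify but expensive to forge.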

7. Internet of Things (IoT)

The Internet of Things (IoT) is here and is becoming an increasingly important topic in the workplace. As its adoption accelerates, Andrew Hobbs examines some of the key ways it is shaking up the business world. For business leaders, both inside and outside the workplace, the IoT has become an increasingly important issue.

Simply put, it refers to any physical object connected to the Internet, especially those that you would not expect.

More specifically, it refers to systems and physical devices that receive and transmit data over a wireless network without human intervention. In general, IoT is a network of uniquely identifiable endpoints (things) that communicate over the Internet, typically via IP connectivity.

IoT is simply a network of interconnected things (devices) embedded with software, hardware, and other technologies that enable them to collect and share data and make it accessible. IoT platforms, which primarily serve as middleware, connect IoT devices and edge gateways to the applications used to handle IoT data.

Part of the IoT ecosystem is the component that allows businesses, governments, and consumers to connect their devices, including smart meters, smart thermostats, and smart home devices, to their networks. The ecosystem also draws on related technologies such as machine learning (ML), artificial intelligence (AI), and smart contracts.

As the Internet of Things grows in the next few years, more devices will join this list. Examples of IoT devices include smart meters, thermostats, smart home devices, and anything else that can connect to the Internet and communicate with other devices.

Despite some challenges, the number of connected devices will multiply. Ultimately, the Internet of Things (IoT) will benefit everyone by supporting our daily lives and keeping us connected to the things and people that matter to us. The Internet of Things is a game-changing concept, and we will see much more of it over the years, not only in technology but also in our everyday lives.

Interestingly, the IoT is made possible by several independent technologies that form its essential components. Together, these technologies make up what people call the “Internet of Things,” which can affect everything connected to it. IoT is an ecosystem of ecosystems in which coopetition (cooperation between competitors) is typical.

In the Internet of Things, each device is connected to the Internet and to other connected devices. IoT devices are stand-alone, Internet-connected devices that can be monitored and controlled from a remote location. Ordinary physical objects become “IoT devices” once they are connected to the Internet and can manage and exchange information.

The Internet of Things is a network of connected devices, such as computers, smartphones, tablets, and other accessories. All these devices and objects with built-in sensors are connected to an IoT platform that integrates data from different devices and applies analytics to share the most valuable information with applications designed for specific needs.
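As a rough illustration of that platform role, the sketch below merges readings from several devices and reduces them to per-metric summaries an application could consume. The device IDs, metric names, and values are made up, and the "analysis" here is just a mean and a maximum; a real IoT platform would do far more.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical readings from different devices: (device_id, metric, value)
readings = [
    ("thermostat-1", "temperature_c", 21.0),
    ("thermostat-2", "temperature_c", 23.0),
    ("meter-7", "power_kw", 1.5),
    ("thermostat-1", "temperature_c", 22.0),
]


def summarize(readings):
    """Group raw readings by metric and compute simple per-metric statistics."""
    by_metric = defaultdict(list)
    for device_id, metric, value in readings:
        by_metric[metric].append((device_id, value))
    summary = {}
    for metric, rows in by_metric.items():
        values = [value for _, value in rows]
        summary[metric] = {
            "devices": sorted({device for device, _ in rows}),  # which devices contributed
            "mean": mean(values),
            "max": max(values),
        }
    return summary


print(summarize(readings)["temperature_c"])
```

Grouping by metric rather than by device is the choice that lets data from different manufacturers' devices be combined into one view.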

IoT devices share the sensor data they collect by sending it to the cloud, so the data does not have to be analyzed locally on the device. The Internet of Things therefore depends on cloud-based applications that interpret and transmit sensor data.

The biggest challenge for IoT technology companies is making the data truly secure. Another is integration: IoT devices must be able to communicate with existing systems before companies, for example in logistics, can put an IoT scenario into practice. On the other hand, IoT technology can be cheap and accessible because it often runs over existing network connections rather than requiring new ones.

Almost anything with an on/off switch can now be connected to the Internet, allowing devices on closed, private networks to communicate with each other. The IoT ecosystem consists of web-enabled smart devices that use embedded systems to collect, send, and process data from their environment. Various types of connectivity can underpin the Internet of Things.

The Internet of Things brings networks together and connects them through the use of connected devices such as computers, smartphones, tablets, smartwatches, and other accessories.

IoT experts help companies generate value from data, and 80% of companies have at least one IoT expert on their management team. According to Accorde scientists, the volume of business information is growing as smart devices such as smart thermostats, smartwatches, and intelligent lighting systems come into use.

8. Big Data

The term “big data” has come to the fore recently, but few people know what it actually means. As the name suggests, vast amounts of data are at its heart.

Big data is used in a wide range of areas, including the economy. The term refers to the ability to process and analyze large, complex data sets to uncover valuable information that companies and organizations can use.

Big data can be stored in its raw form and pre-processed only when it is needed for a specific analysis application. It can be structured (often numerical and easy to format and store) or unstructured (data that must be prepared before it is ready for analysis). Structured data is data that can be processed, stored, and retrieved in a fixed format. Big data technology can also help you decide which data should be moved into a data warehouse.

For example, a big data analysis project could gauge a product's success and forecast future sales by linking the data the product sends back to the number of sales leads for that product or service. Such analysis could help a company find new sales opportunities and, in turn, boost its sales.

Another common application of big data processing is taking unstructured data and organizing its meaning in a more structured way. As big data analysis advances to the point where the analytical process automatically finds patterns in the data it collects and uses them to generate insights, managing the speed of data becomes essential. Although big data is, in reality, mostly an unstructured “data avalanche,” it has to be processed at the speed a real-time economy requires.
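A tiny example of turning unstructured text into structured records: the sketch below pulls typed fields out of free-text notes with a regular expression. The note format, field names, and the `Order` type are hypothetical; real pipelines usually need far more robust parsing, and often machine learning, to cope with messy input.

```python
import re
from dataclasses import dataclass

# Hypothetical free-text order notes, the kind of "unstructured" input described above.
raw_notes = [
    "2024-03-01 order #1042: 3 x widget, shipped to Berlin",
    "2024-03-02 order #1043: 1 x gadget, shipped to Lyon",
    "customer called about delivery times",  # contains no order information
]

ORDER_PATTERN = re.compile(
    r"(?P<date>\d{4}-\d{2}-\d{2}) order #(?P<order_id>\d+): "
    r"(?P<qty>\d+) x (?P<product>\w+), shipped to (?P<city>\w+)"
)


@dataclass
class Order:
    date: str
    order_id: int
    qty: int
    product: str
    city: str


def structure(notes):
    """Turn matching free-text lines into typed Order records; skip everything else."""
    orders = []
    for note in notes:
        m = ORDER_PATTERN.search(note)
        if m:
            orders.append(
                Order(m["date"], int(m["order_id"]), int(m["qty"]), m["product"], m["city"])
            )
    return orders


for order in structure(raw_notes):
    print(order)
```

Once the text is in a fixed schema, it can be stored, queried, and aggregated like any other structured data.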

As with other types of data, big data is most valuable when its elements are usable from the start: the more specific, distinct, and valid the data elements are, the better.

Our ability to handle the volume, velocity, and variety of big data has improved significantly. Beyond sheer scale, the quality of data elements, such as their types and formats, is decisive for acquiring big data on a large scale.

The benefits of processing large amounts of information are the main attraction of big data analytics. Offloading also helps: moving data access and processing elsewhere allows companies to relieve the burden of large-scale data management, processing, and analysis.

Moreover, big data does not strictly follow the standard rules of data and information processing: even seemingly useless data can lead to great results, as Greg Satell explains in Forbes. Still, even the most basic information cannot be made actionable without some processing.

Therefore, big data needs to be defined in terms of its nature, scope, and application. Big data is traditionally described as data collected and processed at high speed, as in a big data system. It is often characterized by the amount of data stored in the system, the rate at which data is generated, collected, processed, or stored, and the kinds of data involved.

Data scientists and consultants have drawn up various lists of seven to ten “V’s,” and some attribute even more to big data. However many V’s you prefer, one thing is sure: big data is here, and it is only getting bigger. As it continues to grow, new technologies are being developed to collect and analyze data more effectively. Big data mining approaches not only have the power to transform entire industries; they are already doing so.

Companies that use big data have a potential competitive advantage over companies that do not. They can make faster, better-informed business decisions and operate more efficiently, provided they use the data effectively. Big data can also help companies in other business areas, such as finance, make faster and sounder decisions.

Meeting the challenges that come with big data is an essential part of working with it, and organizations that want to use big data must address them.

Companies that collect big data from hundreds of sources may be able to spot inaccurate data, but to fix problems, analysts also need data lineage: a record of where each piece of data came from and where it is stored. For a company that collects big data from over a hundred sources, it is critical to confirm that the information is relevant to the business issue before using it in a big data analysis project. The best data scientists can speak the language of business and help leaders frame their challenges in a way that big data can address.
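Data lineage simply means recording where each value came from and what was done to it. The sketch below carries that history alongside each value, so a bad result can be traced back to its source. The source names, the transformation, and the validation rule (amounts must be non-negative) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Record:
    value: float
    source: str                  # system the value originally came from
    steps: tuple[str, ...] = ()  # transformations applied so far (the lineage)


def transform(record: Record, step_name: str, fn: Callable[[float], float]) -> Record:
    """Apply fn to the value and append step_name to the record's lineage."""
    return Record(fn(record.value), record.source, record.steps + (step_name,))


def trace_invalid(records, is_valid):
    """Return (source, steps) for every record whose value fails validation."""
    return [(r.source, list(r.steps)) for r in records if not is_valid(r.value)]


# Hypothetical inputs from three sources; one carries an impossible negative amount.
raw = [Record(120.0, "crm_export"), Record(-5.0, "web_form"), Record(80.0, "pos_terminal")]
processed = [transform(r, "convert_to_thousands", lambda v: v / 1000) for r in raw]
print(trace_invalid(processed, lambda v: v >= 0))  # [('web_form', ['convert_to_thousands'])]
```

Keeping records immutable and returning a new record per step means the lineage can never be silently overwritten.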

9. 5G Network

To keep pace with the rapid growth of mobile devices, not to mention the flood of streaming video, the mobile industry is working on 5G, the fifth generation of wireless networking technology. 5G is the latest generation of mobile technology, designed to significantly increase the speed and responsiveness of wireless networks.

5G connections will offer much higher speeds than existing mobile technologies, with average download speeds of 1Gbps expected in the next few years, far above today’s 4G averages. 5G will also deliver many new features that users are not yet accustomed to, and these speeds are just the beginning.
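To put a 1Gbps average in perspective, the arithmetic is simple: transfer time equals file size in bits divided by the link rate. The 2GB file and the 50Mbps comparison rate below are hypothetical round numbers, not measured figures, and real downloads take longer because of protocol overhead and congestion.

```python
def download_seconds(size_bytes: int, rate_bits_per_second: float) -> float:
    """Idealized transfer time: size in bits divided by link rate (ignores overhead)."""
    return size_bytes * 8 / rate_bits_per_second


movie = 2_000_000_000  # hypothetical 2 GB file
print(download_seconds(movie, 1_000_000_000))  # 1 Gbps: 16.0 seconds
print(download_seconds(movie, 50_000_000))     # hypothetical 50 Mbps: 320.0 seconds
```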

5G networks, related wireless devices, and 5G use cases are likely to roll out over the course of the 2020s. In the meantime, companies are expected to build 5G networks that are at least as fast as today’s networks but are based on existing infrastructure. While the first commercial versions of the new 5G networks are still a few years away, several companies are already developing and testing 5G devices.

They are engaged in research and development, testing, and deployment of mobile network equipment. This article has been updated with information on progress in standardizing new 5G technology and plans for mobile network operators to deploy 5G networks worldwide.

By the end of the year, we will have seen the first 5G iPhone, and we expect most wireless networks to offer nationwide coverage. As network operators expand their 5G coverage, existing 4G networks will play a role through technologies such as dynamic spectrum sharing, which lets operators use the same spectrum for both 4G and 5G.

A key feature of 5G will be the ability to create multiple virtual networks over the same physical infrastructure, each customized and optimized for specific services and traffic, a practice known as network slicing. Data could be transferred from telecommunications companies’ computers to public networks or stored on company premises, an approach known as colo-edge.
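The idea of multiple virtual networks tuned to specific services can be viewed as a matching problem: each slice promises certain latency and bandwidth, and each service is placed on a slice that meets its needs. The sketch below is a toy model, not an operator API. The slice names echo the commonly cited 5G service types (eMBB, URLLC, mMTC), but every number and the selection rule (the slice with the least reserved bandwidth that satisfies both requirements) are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Slice:
    name: str
    max_latency_ms: float  # worst-case latency the slice promises
    bandwidth_mbps: float  # bandwidth reserved per device


# Hypothetical slice catalog; the numbers are made up for illustration.
SLICES = [
    Slice("URLLC (low-latency control)", max_latency_ms=1, bandwidth_mbps=10),
    Slice("eMBB (mobile broadband)", max_latency_ms=20, bandwidth_mbps=500),
    Slice("mMTC (massive IoT)", max_latency_ms=1000, bandwidth_mbps=0.1),
]


def choose_slice(needed_latency_ms: float, needed_mbps: float) -> Optional[Slice]:
    """Pick the least bandwidth-hungry slice that meets both requirements, if any."""
    candidates = [
        s for s in SLICES
        if s.max_latency_ms <= needed_latency_ms and s.bandwidth_mbps >= needed_mbps
    ]
    return min(candidates, key=lambda s: s.bandwidth_mbps, default=None)


print(choose_slice(1000, 0.05).name)  # a smart meter: mMTC (massive IoT)
print(choose_slice(50, 100).name)     # video streaming: eMBB (mobile broadband)
```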

Companies that want a private 5G network can buy their own infrastructure and get operational support from a mobile operator, or build and maintain the network themselves using their own spectrum. Wi-Fi and LTE will work well in many environments, and businesses are expected to keep building private systems that use them, with usage likely to increase in the coming years.

However, over the next five years, private 5G networks will grow so large that many sites will skip wired connections altogether, or at least keep them to a minimum. The improvements that network operators make to prepare for a nationwide 5G roll-out will also make 4G better. US carriers have promised nationwide 5G availability by 2020, but it will only get faster if carriers modernize their networks.

According to a recent report by the National Institute of Standards and Technology, the first 5G networks will not be nearly as fast as promised.

Companies that use 4G mobile networks to connect a large number of IoT devices will be among the first to benefit from the introduction of 5G networks, predicts Kearney.

Besides, Kearney says the 5G ultra-broadband network can deliver speeds up to 10 times faster than current LTE networks. Low-band 5G, which uses lower frequencies, will offer a smaller speed boost over LTE. Either way, we will see a massive increase in the number of devices that can receive a 5G signal.

One of the main goals of 5G is to use wireless networks to replace traditional wired connections by increasing the data bandwidth available to devices and minimizing latency. It is expected to supercharge the Internet of Things by providing the infrastructure needed to transport the vast amounts of data that will enable a smarter, more connected world.

Most 4G channels are 20MHz wide, and several of them can be combined for up to 140MHz in total. Low-band 5G channels, by contrast, can be as narrow as 5MHz (20MHz on AT&T and T-Mobile), so they are not as spacious as 4G. Millimeter-wave 5G channels are 100MHz wide, and Verizon will use up to 800MHz of them.
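Channel width matters because, in the ideal case, data capacity grows in proportion to bandwidth. The sketch below adds up combined channels and applies the Shannon-Hartley limit, C = B log2(1 + SNR), which is a theoretical ceiling rather than a real-world speed. The split of 140MHz into seven 20MHz channels, of 800MHz into eight 100MHz channels, and the signal-to-noise ratio are assumptions for illustration.

```python
import math


def aggregated_mhz(channel_widths_mhz):
    """Total bandwidth when several channels are combined (carrier aggregation)."""
    return sum(channel_widths_mhz)


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + SNR): an upper bound, not a real speed."""
    return bandwidth_hz * math.log2(1 + snr_linear)


print(aggregated_mhz([20] * 7))   # seven 20 MHz 4G channels: 140
print(aggregated_mhz([100] * 8))  # eight 100 MHz mmWave channels: 800

# At the same (assumed) signal quality, capacity scales linearly with bandwidth.
snr = 100  # hypothetical signal-to-noise ratio (20 dB)
ratio = shannon_capacity_bps(800e6, snr) / shannon_capacity_bps(20e6, snr)
print(round(ratio))  # 40
```

In practice, mmWave signals have poorer reach and lower SNR at a distance, which is one reason wide channels do not translate directly into wide availability.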

Verizon’s 5G will offer lightning-fast speeds where it is available, but it will piggyback on Verizon’s LTE spectrum for years to come. In practice, mobile networks will mostly shift to a less exciting version of 5G over the years. Verizon’s mmWave-based 5G network will have limited availability, with users getting a 5G connection in less than 1% of cases.

10. Autonomous Driving: An Easy, Safe Driverless Drive

We have heard about self-driving cars, autonomous vehicles, and driverless cars for years. Self-driving cars (loosely called driverless cars and more formally known as autonomous vehicles, or AVs) are a top issue for many in the industry.

When self-driving vehicles hit the road, they will make our streets safer than ever. When we put a fleet of self-driving cars on the road, we want them to be safe not only for their passengers but for everyone else on the street.

Given the rapid advances in self-driving car technology, I believe fully autonomous cars will likely be in production by the end of the decade, possibly even sooner. They will make transport more comfortable and more accessible, and I am convinced that they will also make our roads safer.

Once this ecosystem sets the standard for self-driving cars, we will not look back to the days when we drove cars like the Toyota Prius, Ford Focus, or Honda Civic ourselves.

Driverless cars will remove human drivers from the equation and keep dangerous, drowsy, impaired, or distracted drivers off our roads. Insuring a human-driven vehicle may become prohibitively expensive, and at some point driverless cars could even be made compulsory. Self-driving cars could eliminate human error, which accounts for about 90% of all accidents, leaving the remaining accident risk to autonomous vehicle design and quality. A fleet of self-driving cars is likely to have dash cameras that use artificial intelligence to help minimize accidents.

Once safety is defined and established, self-driving and driverless vehicles will still need to become more transparent, says Dr. Michael O’Brien, director of the Center for Automotive Safety at the University of California, Berkeley. For more information, see our guide to the critical aspects of self-driving cars and autonomous vehicles.

Opening self-driving cars to the general public has several clear advantages. For one, they will serve an untapped customer base, including people who are physically unable to operate a vehicle.

The potential is obvious: self-driving cars could help blind people become independent. Operating them alone would still require some training, according to Teletrac. How well they will perform in the real world, under real traffic laws, is unclear, but the promise is there.

Even if self-driving cars are built to be safer, they will still crash. Even if they eliminate problems such as distraction and incapacitation, accidents are likely to continue, especially if autonomous vehicles are built to be just as aggressive on the road as human drivers.

The transition to fully autonomous vehicles in Canada will take time, but there is no doubt that driverless cars are on their way. Self-driving vehicles may be part of the future, but it will be a long time before they are ready for public use on America’s roads.

In the meantime, the critical question is not when self-driving cars will be ready for the road, but when the roads will be ready for them. It is a question we have never had to ask about human drivers.

Drivers will become increasingly dependent on their cars’ (partly) autonomous abilities. For example, if a human driver tells a self-driving car to get around a moving truck parked on the street, the car may wait behind the truck for a while before signaling the vehicle behind to go around it.

During this time, drivers will inevitably begin to lose driving experience in various scenarios as they rely more and more on the autonomous capabilities of their cars.

To make self-driving car technology truly safe, all vehicles on the road should be fully autonomous, programmed to follow the rules of the road, and able to communicate with each other. Human drivers will no longer control the cars themselves, but humans will still develop the machines and software that do.

Self-driving cars will remain in a state of “learning”: machines must learn the decisions that humans make before they can take the wheel. That makes it essential to account for the potential safety risks of bringing a self-driving car onto our roads.

As self-driving capabilities become commonplace, human drivers may become overly dependent on autopilot technology and leave their safety in the hands of automation, rather than acting as a backup. Driverless cars alone will not decide whether there are fewer or more accidents; human error remains one of the factors.

Even fully autonomous vehicles will still have to contend with human drivers’ mistakes, at least for the near future. One of the most challenging questions about self-driving cars’ safety may therefore be how to test it.

11. Graphene Technology

One company has completed a $27.5 million round of financing and found a way to mass-produce graphene, the material touted in recent years as one of the most promising new technologies.

The EU’s Graphene Flagship also supports graphene sensor applications through its Biomedical Technologies Work Package. The company manufactures a wide range of graphene-based products, including products for biofuel, bioenergy, and other industrial applications, with uses in biotechnology, biopharmaceuticals, energy storage, medical devices, and electronics.

As a technology consultant who has been involved in running several high-tech start-ups, I know of other world-changing graphene technologies that may be much closer to reality. Graphene technology still holds much potential, including for batteries, and that is worth keeping in mind.

The real winners in the graphene race, he says, will be those who can translate the technology into applications where there is already market demand. Until graphene can be produced and supplied at competitive prices for existing technologies, it is unlikely to become ubiquitous.

One company pioneering graphene battery technology is Los Angeles-based Real Graphene. It may take years, or even decades, to see a graphene-improved smartphone battery or lithium-ion battery, but when the technology fully matures, it could make a difference in energy storage, not least grid energy storage. Graphene battery technology is still years away and in the early stages of development, but its potential is tantalizing.

In the meantime, the company’s laboratory is investigating, as its next research stage, whether its graphene additive technology can be applied successfully to other materials, such as polymers like polyethylene and polypropylene.

The Real Graphene team of scientists and engineers, including David Bohm and led by the company’s co-founder and Chief Research Officer, Dr. David Lohman, believes its new dispersion method could also be used in other systems such as epoxies, including fiber-reinforced polymer composites, which have also suffered from graphene homogeneity problems in the past. These two examples are just the tip of the iceberg, as graphene is used in a wide range of disciplines, including aerospace, automotive, electronics, medicine, energy, materials science, and engineering. Elsewhere in the world, the industry still needs companies that can turn graphene into practical, innovative products.

If graphene could be produced from materials that would otherwise be wasted, its long-term price would fall, and everyone would have access to it. Although we are desperately trying to keep up in the technology race, graphene is still seen as an overpriced gimmick in many parts of the world, according to Dr. Savjani.

Bolder and more enterprising technologies are being developed to add graphene to various molecules or treat it as a scaffold from which biomolecules can be grafted. Smartphones and tablets could become much more durable and flexible with graphene, perhaps even folded like paper.

You can do this in the lab, but the unsolved trick with graphene is making super-thin sheets at scale. What is needed is an automated manufacturing process that produces high-quality, low-cost graphene for mobile devices. Such a method could also use recyclable materials, which would be hugely important for companies that want to produce tons of graphene every year. Graphene could also be produced at low cost using the same silicon chip production process already used for smartphone chips.

While silicon is almost impossible to remove from graphene, self-produced graphite can be cleaned using known techniques. Lockheed Martin recently developed a graphene filter called Perforene that could revolutionize the desalination process.

There are methods for seeing what a flake of graphene looks like, and optical microscopy can examine it at a much finer level. The sensor platform developed by the KTH Royal Institute of Technology in Sweden works with tiny drops of blood. It is based on silicon photonics and graphene photodetectors that detect specific molecules in the infrared spectrum. Scanning electron microscopy, which scans a sample with a beam of electrons, can resolve features smaller than 1 micrometer (0.001 millimeters).

Graphene chips could be much faster than silicon-based devices, and research suggests they could become commercially viable. While silicon can convert light into electricity only at specific wavelengths, graphene works across a very broad range of wavelengths, which means it could be more efficient than gallium arsenide, another widely used material.

Motta says mirrors coated with graphene could also have applications, as graphene conducts heat very well. Graphene could also improve existing technologies such as supercapacitors and micro-supercapacitors, and it is likely to be developed for other applications, such as solar cells and other energy-efficient devices.